What is SDXL v1.0

On July 26, 2023, Stability AI unveiled the much-anticipated SDXL v1.0.

Stable Diffusion XL, or SDXL, represents the latest advancement in image generation models. Tailored for more realistic outputs, it surpasses its predecessors, including SD 2.1, by delivering more detailed images and compositions.

With the power of Stable Diffusion XL, you can now craft more lifelike images through enhanced face generation, produce clear text within images, and create more aesthetically pleasing art with shorter prompts. This breakthrough model is set to redefine the boundaries of image generation, offering users an unprecedented level of control and quality in their creations.

What are the new features in SDXL

SDXL introduces a host of new features that significantly enhance its capabilities:

- Exceptional Quality: SDXL v1.0 represents a substantial improvement over SD v1.5, SD v2.1, and even SDXL v0.9. Blind testers rated the images as the best in terms of overall quality and aesthetics across various styles, concepts, and categories.

- Competitive with Midjourney: This is a significant win for open-source generative AI models, as the quality of SDXL v1.0 is comparable to the latest versions of Midjourney.

- Greater Versatility: SDXL v1.0 can achieve more styles than its predecessors and has a deeper understanding of each style. You can experiment with more artist names and aesthetics than before.

- Simpler Prompts: Compared to SD v1.5 and SD v2.1, SDXL requires fewer words to create complex and beautiful images. There’s no longer a need for lengthy restrictive paragraphs.

- Resolution: SDXL v1.0 is trained at a base resolution of 1024 x 1024, producing images with improved detail compared to its predecessors.

- Aspect Ratio: SDXL v1.0 handles aspect ratios better. Its predecessors would produce many distortions under stretched aspect ratios.

- Retains All SD Features: SDXL retains all the features of SD; it can be fine-tuned on concepts to create custom models and LoRAs, and it can be used with ControlNet. We’re excited to see the creativity overflow as the community builds new models in the coming weeks!

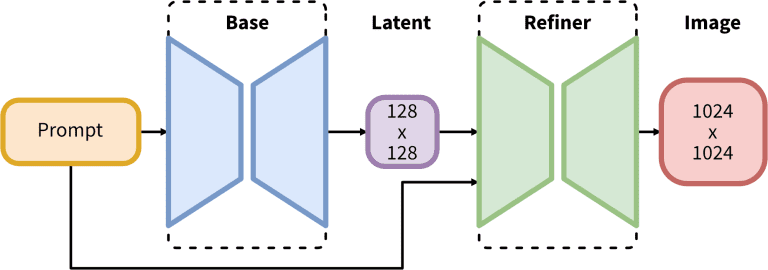

It’s worth noting that SDXL comes with two models and a two-step process: the base model generates noisy latents, which are then processed by a refiner model specialized for the final denoising steps. The base model can be used on its own, but the refiner model can add a significant amount of clarity and quality to the images.

How to use SDXL 1.0 locally

The two most popular methods for running SDXL locally (on your own computer) are:

- AUTOMATIC1111’s Stable Diffusion WebUI: This is the most popular WebUI overall, boasting the most features and extensions. It’s user-friendly and easy to learn, making it a great choice for beginners and experts alike.

- ComfyUI: This option is harder to learn because of its node-based interface, but it offers significant advantages for automating workflows. It can also be substantially faster, with image generation reported at 5-10 times the speed of AUTOMATIC1111.

The following is a guide on how to use AUTOMATIC1111 to run SDXL.

Download SDXL models

There are three files to download: the base model (sd_xl_base_1.0.safetensors), the refiner model (sd_xl_refiner_1.0.safetensors), and the VAE (sdxl_vae.safetensors). The download addresses are as follows:

Place the base and refiner model files in the folder stable-diffusion-webui/models/Stable-diffusion.

Place the VAE file in the folder stable-diffusion-webui/models/VAE.
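A quick way to confirm that the files landed in the right place is a small script like the one below. It is only a sketch: the function name `missing_sdxl_files` is made up for illustration, and the file names are the ones used later in this guide, so adjust them if your downloads are named differently.

```python
from pathlib import Path

# Folder layout from the two placement steps above. The checkpoint folder is
# spelled "Stable-diffusion" in the WebUI repository; on Windows the case
# does not matter. File names are assumed to match the ones used in this guide.
EXPECTED_FILES = {
    "models/Stable-diffusion": [
        "sd_xl_base_1.0.safetensors",
        "sd_xl_refiner_1.0.safetensors",
    ],
    "models/VAE": ["sdxl_vae.safetensors"],
}


def missing_sdxl_files(webui_root):
    """Return the expected SDXL file paths that do not exist under webui_root."""
    root = Path(webui_root)
    missing = []
    for folder, names in EXPECTED_FILES.items():
        for name in names:
            path = root / folder / name
            if not path.is_file():
                missing.append(path)
    return missing


if __name__ == "__main__":
    # Hypothetical location; point this at your own WebUI folder.
    for path in missing_sdxl_files("stable-diffusion-webui"):
        print(f"Missing: {path}")
```

If the script prints nothing, all three files are where the WebUI expects them.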

Install/Upgrade AUTOMATIC1111

AUTOMATIC1111 can run SDXL as long as you upgrade to the newest version.

New installation

Follow these directions if you don’t have AUTOMATIC1111’s WebUI installed yet. This is the UI you use to generate images with:

Existing installation

For an existing installation of AUTOMATIC1111:

Navigate to your WebUI folder in the Command Prompt. The default name for this folder is stable-diffusion-webui.

As a reminder, the command cd <FOLDER NAME> is used to navigate into a directory (folder), the command cd .. is used to move up a directory, and the command dir is used to list all directories in your current directory.

For example, if your WebUI folder is located in the root (top-level) directory, you would type the following and press Enter:

cd stable-diffusion-webui

When you’re in this folder, enter this command to update to the latest version of the WebUI:

git pull

Using SDXL Base model

Now run the WebUI (double click the file webui.bat).

Select the checkpoint you downloaded, sd_xl_base_1.0.safetensors. Select the VAE sdxl_vae.safetensors.

Here are the suggested initial setup configurations for SDXL v1.0. You’ll notice that these settings differ slightly from what you may be accustomed to in earlier SD models. I’ll delve deeper into each of these aspects in the following sections.

- Resolution: 1024 Width x 1024 Height (or greater)

- Sampling steps: 30

- Sampling method: DPM++ 2M Karras (or any DPM++ sampler)
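If you prefer scripting to clicking, the same starting settings can be sent to the WebUI over its HTTP API. The sketch below assumes the WebUI was launched with the --api command-line argument and is listening on the default local address; neither is covered in this guide, and the helper names are made up for illustration.

```python
import json
import urllib.request

# Assumption: the WebUI was started with --api and uses the default address.
WEBUI_URL = "http://127.0.0.1:7860"


def build_txt2img_payload(prompt):
    """Build a request body with the suggested SDXL starting settings."""
    return {
        "prompt": prompt,
        "width": 1024,
        "height": 1024,
        "steps": 30,
        # Sampler name as shown in the WebUI dropdown; newer WebUI versions
        # may list samplers differently.
        "sampler_name": "DPM++ 2M Karras",
    }


def txt2img(prompt, base_url=WEBUI_URL):
    """POST the payload to /sdapi/v1/txt2img and return base64-encoded images."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(build_txt2img_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["images"]
```

Calling `txt2img("a lighthouse at dusk")` only works while the WebUI is running; `build_txt2img_payload` can be inspected on its own without a running server.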

Using the SDXL Refiner model

The Refiner model is employed to infuse additional details and enhance the sharpness of the image, making it particularly effective for realistic generations.

In practice, you’ll utilize this feature through the img2img function in AUTOMATIC1111 with low denoising (low strength).

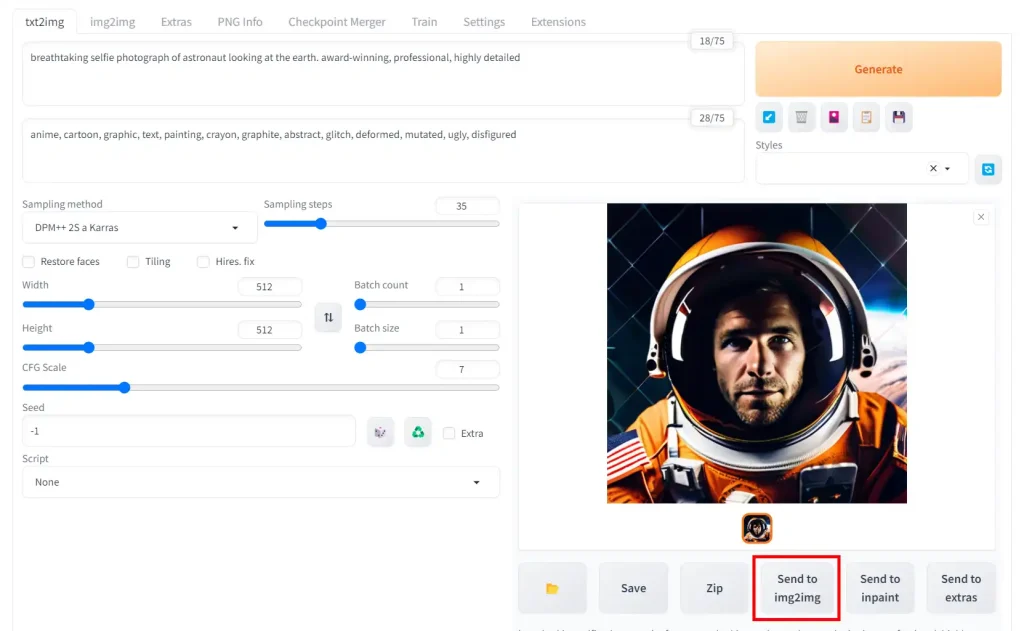

You start by generating an image as usual with the SDXL v1.0 base model.

Then, beneath the image, you’ll select the option “Send to img2img”.

Your image will open in the img2img tab.

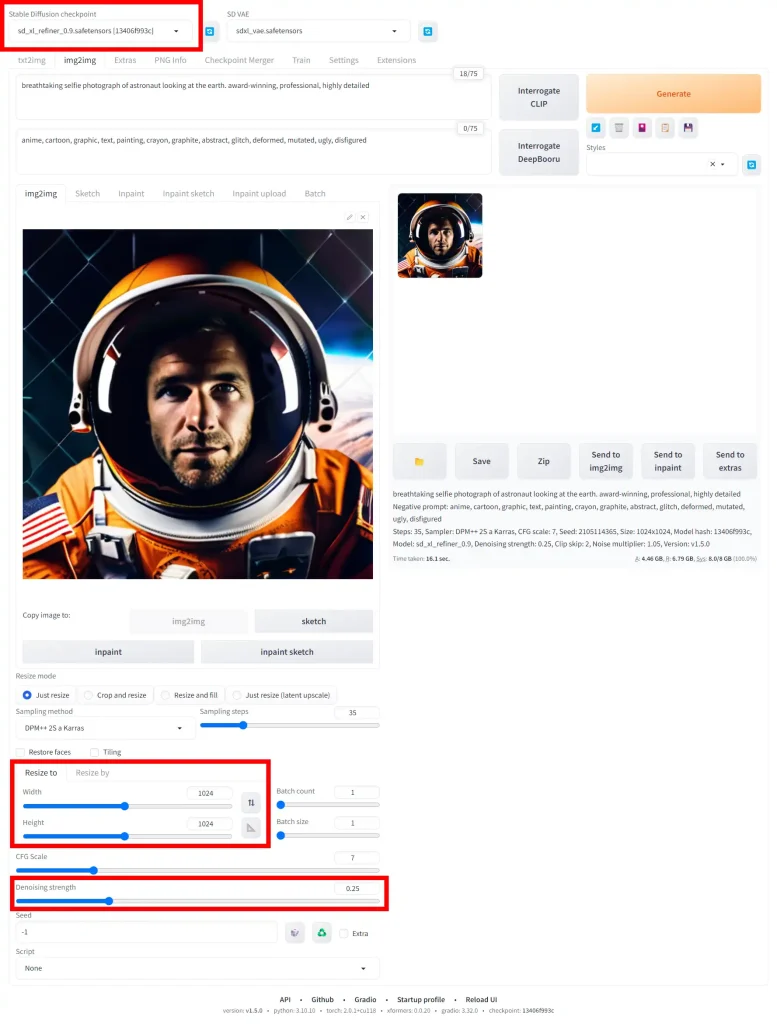

Make the following changes:

- In the Stable Diffusion checkpoint dropdown, select the refiner sd_xl_refiner_1.0.safetensors.

- In the Resize to section, change the width and height to 1024 x 1024 (or whatever the dimensions of your original generation were).

- Bring Denoising strength to 0.25 (higher denoising will make the refiner stronger. While this can make the image sharper, it can also change the content of the image drastically).
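The three changes above map onto a handful of fields if you drive the img2img step through the WebUI's HTTP API instead of the browser. This is a sketch under assumptions: the WebUI must be running with the --api argument, the body would be posted to the /sdapi/v1/img2img endpoint, the checkpoint title in your installation may differ from the bare file name used here, and `build_refiner_payload` is a hypothetical helper name.

```python
import base64


def build_refiner_payload(image_bytes, prompt, width=1024, height=1024,
                          denoising_strength=0.25):
    """Build an img2img request body that runs the refiner over a base image."""
    return {
        # The API expects the input image as a base64-encoded string.
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        # Keep the dimensions of the original generation.
        "width": width,
        "height": height,
        # Low strength keeps the content; higher values change it more.
        "denoising_strength": denoising_strength,
        # Assumed checkpoint title; check the name shown in your dropdown.
        "override_settings": {
            "sd_model_checkpoint": "sd_xl_refiner_1.0.safetensors",
        },
    }
```

Building the same payload with several `denoising_strength` values, for example 0.25 and 0.5, is a simple way to compare how strongly the refiner alters an image.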

Here are the outcomes generated by the refiner model using varying levels of Denoising strength. I suggest you identify the optimal balance that best suits your specific needs. In this instance, I find the generation with a denoising strength of 0.5 to be the most appealing.

Now it’s your turn to give it a try. With the knowledge and guidelines provided, you’re well-equipped to explore the capabilities of the SDXL v1.0 model. Experiment with different settings, find your sweet spot, and create stunning images. Remember, the journey of discovery is all about trial and error. So, don’t be afraid to make mistakes and learn from them. Happy creating!

Possible errors and troubleshooting

Error: torch.cuda.OutOfMemoryError: CUDA out of memory

SDXL does demand more VRAM compared to v2.1 and v1.5, so it might catch you off guard if you encounter a memory error, especially when previous models ran without a hitch.

Consider running the WebUI with the --medvram command-line argument, which trades off a bit of speed for reduced VRAM usage. There’s also the --lowvram argument, which significantly slows down the process but further minimizes VRAM usage.

Model Takes Forever to Load

When you select the model in the Stable Diffusion checkpoint dropdown, the spinner keeps going forever.

Try these fixes:

- Update xformers (xformers: 0.0.20 or later)

- Disable your extensions

Update Xformers

Right click your webui-user.bat file and click edit (Click Show more options -> Edit on Windows 11). Select Notepad or your program of choice.

After the line “set COMMANDLINE_ARGS=” add “--xformers”, so that the line reads: set COMMANDLINE_ARGS=--xformers

Save the file, and then double click it to run the WebUI and update xformers.
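If you would rather not edit the file by hand, the same change can be scripted. The function below is a minimal sketch, not part of the WebUI: `add_commandline_arg` is a made-up helper that works on the text of webui-user.bat and assumes the file contains a "set COMMANDLINE_ARGS=" line. It works the same way for other arguments mentioned in this guide, such as --medvram.

```python
def add_commandline_arg(bat_text, flag):
    """Return bat_text with flag appended to the COMMANDLINE_ARGS line.

    The flag is added only if it is not already present, so calling the
    function twice with the same flag does not duplicate it.
    """
    lines = bat_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().lower().startswith("set commandline_args="):
            prefix, _, current = line.partition("=")
            args = current.split()
            if flag not in args:
                args.append(flag)
            lines[i] = f"{prefix}={' '.join(args)}"
            break
    else:
        raise ValueError("no 'set COMMANDLINE_ARGS=' line found")
    # Lines are rejoined with "\n"; Notepad and cmd.exe generally accept this.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    example = "@echo off\n\nset COMMANDLINE_ARGS=\n\ncall webui.bat\n"
    print(add_commandline_arg(example, "--xformers"))
```

To apply it to a real installation, read webui-user.bat into a string, pass it through the function, and write the result back, keeping a backup copy of the original file.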

Disable extensions

Some extensions won’t play well with SDXL at the moment. We’ll disable them for now.

Go to the Extensions tab in the WebUI -> Click the All button under Disable -> Click Apply and restart UI